- Pip how to install curl in docker update

- Pip how to install curl in docker upgrade

- Pip how to install curl in docker code

`$ docker build -t python-with-syslog .`

Pip how to install curl in docker code

Building specific versions of TensorFlow Serving

If you want to build from a specific branch (such as a release branch), pass `-b` and the branch name to `git clone`. We will also want to match the build environment for that branch of code by passing the `run_in_docker.sh` script the Docker development image we'd like to use. For example, to build version 1.10 of TensorFlow Serving, clone with `git clone -b r1.10` … and pass the matching image with `-d`:

`$ tools/run_in_docker.sh -d tensorflow/serving:1.10-devel \` …

If you'd like to apply generally recommended optimizations, including utilizing platform-specific instruction sets for your processor, you can add `--config=nativeopt` to Bazel build commands when building TensorFlow Serving. For example:

`tools/run_in_docker.sh bazel build --config=nativeopt tensorflow_serving/...`

It's also possible to compile using specific instruction sets. Wherever you see `bazel build` in the documentation, simply add the corresponding compiler flag. For example, for AVX2:

`tools/run_in_docker.sh bazel build --copt=-mavx2 tensorflow_serving/...`

Note: These instruction sets are not available on all machines, especially older ones. If you are in doubt, use the default `--config=nativeopt` to build an optimized version of TensorFlow Serving for your processor.

In order to build a custom version of TensorFlow Serving with GPU support, we recommend building with the provided Docker images, or following the approach in …

To run Python client code without the need to build the API, you can install the TensorFlow Serving Python API PIP package, `tensorflow-serving-api`, using:

`pip install tensorflow-serving-api`
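The version-specific build described above pairs a release branch (`r1.10`) with a matching development image (`tensorflow/serving:1.10-devel`). The dry-run sketch below prints that pairing for a given version. It assumes, based only on the single 1.10 example, that other releases follow the same `rX.Y` / `X.Y-devel` naming, so check that the branch and image actually exist. The Bazel part reuses the whole-tree build command from this page, and the repository URL is a placeholder you must supply yourself. The helper names are hypothetical, and nothing is executed; the commands are only printed.

```shell
#!/usr/bin/env bash
# Dry-run sketch: print the clone and build commands for a TensorFlow Serving version.
# Assumption: releases follow the "rX.Y" branch / "X.Y-devel" image naming seen in
# the 1.10 example; verify the names exist before relying on them.

# Release branch for a version, e.g. 1.10 -> r1.10
release_branch() {
  printf 'r%s\n' "$1"
}

# Matching Docker development image, e.g. 1.10 -> tensorflow/serving:1.10-devel
devel_image() {
  printf 'tensorflow/serving:%s-devel\n' "$1"
}

# Print (not run) the commands. The optional second argument is the repository URL,
# which this page does not give; it defaults to a placeholder string.
# The second command is meant to be run from the root of the cloned repository.
print_build_commands() {
  local version="$1"
  local repo_url="${2:-<repository-url>}"
  printf 'git clone -b %s %s\n' "$(release_branch "$version")" "$repo_url"
  printf 'tools/run_in_docker.sh -d %s bazel build -c opt tensorflow_serving/...\n' \
    "$(devel_image "$version")"
}

print_build_commands 1.10
```

Deriving both names from one version string keeps the branch and the build image from drifting apart, which is the point the paragraph above makes about matching the build environment to the branch.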

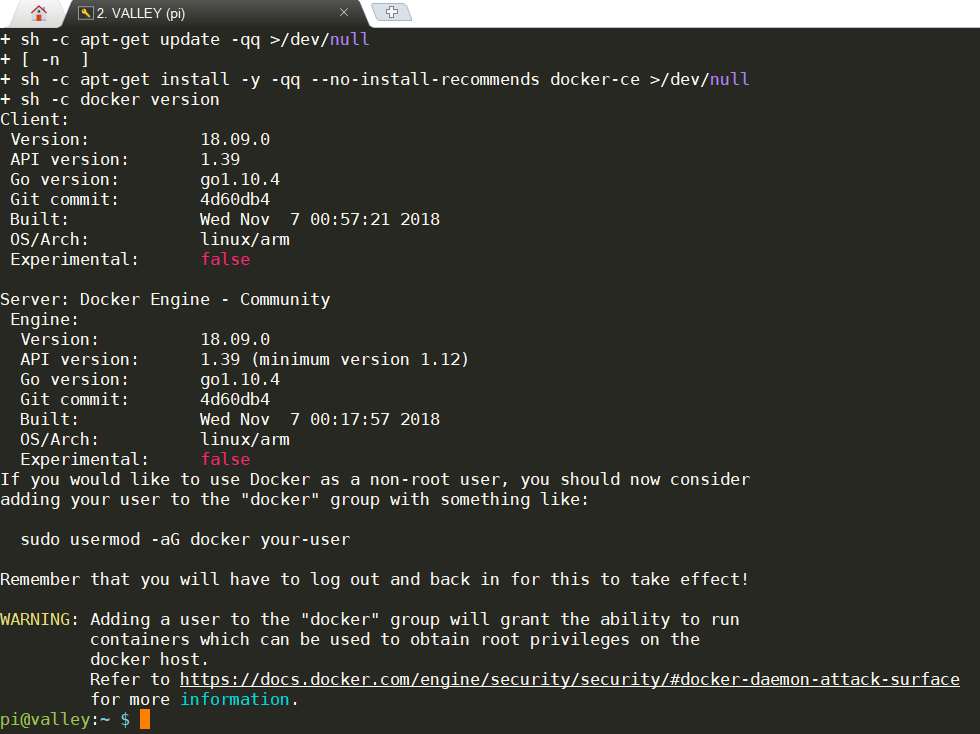

The recommended approach to building from source is to use Docker. The TensorFlow Serving Docker development images encapsulate all the dependencies you need to build your own version of TensorFlow Serving. For a listing of what these dependencies are, see the TensorFlow Serving …

Note: Currently we only support building binaries that run on Linux.

Clone the build script

After installing Docker, we need to get the source we want to build from. We will use Git to clone the master branch of TensorFlow Serving with `git clone` …

In order to build in a hermetic environment with all dependencies taken care of, we run build commands through the `tools/run_in_docker.sh` script. By default, the script builds with the latest Docker development image.

TensorFlow Serving uses Bazel as its build tool. You can use Bazel commands to build individual targets or the entire source tree. To build the entire tree, execute:

`tools/run_in_docker.sh bazel build -c opt tensorflow_serving/...`

Binaries are placed in the `bazel-bin` directory and can be run using a command like:

`bazel-bin/tensorflow_serving/model_servers/tensorflow_model_server`

To test your build, execute:

`tools/run_in_docker.sh bazel test -c opt tensorflow_serving/...`

See the tutorial for more in-depth examples of running TensorFlow Serving.
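The whole-tree build command above can be combined with the optimization flags discussed on this page (`--config=nativeopt`, or an explicit instruction-set flag such as `--copt=-mavx2`). The sketch below is an assumed heuristic, not a rule from the build docs: it adds `--copt=-mavx2` only when the CPU's `flags` line in `/proc/cpuinfo` lists `avx2`, and otherwise falls back to `--config=nativeopt`, the documented default when in doubt. It assumes Linux and that the binary will run on the same machine that performs the check. The function names are hypothetical, and the script only prints the command; it does not invoke Bazel.

```shell
#!/usr/bin/env bash
# Sketch: choose an optimization flag for the whole-tree TensorFlow Serving build.
# Assumptions: Linux, the binary will run on this same machine, and /proc/cpuinfo
# (or a file passed as an argument) lists CPU features on "flags" lines.

# Succeeds if the given CPU feature (e.g. avx2) appears as a whole word on a flags line.
# A missing or unreadable file simply counts as "feature not present".
cpu_has_flag() {
  local feature="$1"
  local cpuinfo="${2:-/proc/cpuinfo}"
  grep -E '^flags[[:space:]]*:' "$cpuinfo" 2>/dev/null | grep -qw -- "$feature"
}

# Explicit AVX2 flag when the CPU reports it; otherwise the documented fallback.
optimization_flag() {
  if cpu_has_flag avx2 "$1"; then
    printf '%s\n' '--copt=-mavx2'
  else
    printf '%s\n' '--config=nativeopt'
  fi
}

# Print (not run) the build command with the chosen flag.
build_command() {
  printf 'tools/run_in_docker.sh bazel build -c opt %s tensorflow_serving/...\n' \
    "$(optimization_flag "$1")"
}

build_command
```

Preferring the explicit flag when AVX2 is present keeps the build tied to one named instruction set; if you are unsure whether the deployment machines match the build machine, `--config=nativeopt` alone remains the safer choice described above.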

Note: In the `apt-get` install and upgrade commands on this page, replace `tensorflow-model-server` with `tensorflow-model-server-universal` if your processor does not support AVX instructions.
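To decide which package name to use, you can check whether the CPU reports AVX. The sketch below assumes Linux, where `/proc/cpuinfo` lists CPU features on `flags` lines; if that file is missing or unreadable it conservatively suggests the universal package. The function name is hypothetical, and the script only prints a suggestion; it does not configure the APT source or install anything.

```shell
#!/usr/bin/env bash
# Sketch: suggest which ModelServer APT package to install on this machine.
# Assumption: Linux, where /proc/cpuinfo (or the file passed as $1) lists CPU
# features on "flags" lines. If the file is missing or unreadable, assume no AVX.
choose_model_server_package() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  # -w matches "avx" only as a whole word (not inside "avx2" or "avx512f").
  if grep -E '^flags[[:space:]]*:' "$cpuinfo" 2>/dev/null | grep -qw avx; then
    printf '%s\n' tensorflow-model-server
  else
    printf '%s\n' tensorflow-model-server-universal
  fi
}

pkg="$(choose_model_server_package)"
echo "Suggested package: ${pkg}"
# The printed name can then be used in the apt-get commands, for example:
#   sudo apt-get install "${pkg}"
```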

Pip how to install curl in docker upgrade

Once installed, the binary can be invoked as a command-line program. You can upgrade to a newer version of tensorflow-model-server with:

`apt-get upgrade tensorflow-model-server`

Pip how to install curl in docker update

Installing ModelServer

Installing using Docker

The easiest and most straightforward way of using TensorFlow Serving is with Docker images. We highly recommend this route unless you have specific needs that are not addressed by running in a container.

TIP: This is also the easiest way to get TensorFlow Serving working with GPU support.

The TensorFlow Serving ModelServer binary is available in two variants:

- tensorflow-model-server: Fully optimized server that uses some platform-specific compiler optimizations like SSE4 and AVX instructions. This should be the preferred option for most users, but it may not work on some older machines.

- tensorflow-model-server-universal: Compiled with basic optimizations, but doesn't include platform-specific instruction sets, so it should work on most machines. Use this if tensorflow-model-server does not work for you.

Note that the binary name is the same for both packages, so if you already installed tensorflow-model-server, you should first uninstall it using `apt-get remove tensorflow-model-server`.

Add the TensorFlow Serving distribution URI as a package source (one-time setup):

`echo "deb stable tensorflow-model-server tensorflow-model-server-universal" | sudo tee /etc/apt//tensorflow-serving.list && \`

Install and update TensorFlow ModelServer:

`apt-get update && apt-get install tensorflow-model-server`
